API Testing in GitHub Actions: Secrets, Auth, Retries, Rate Limits

Most API test failures in GitHub Actions are not “logic bugs”. They are plumbing bugs: missing secrets, expired auth, flaky retries, and rate limits you only hit under CI concurrency.

If you want deterministic API testing in GitHub Actions, treat your workflow like production code:

- Keep test definitions diffable and reviewable in pull requests.

- Inject auth via GitHub Secrets or OIDC, never via committed files.

- Make retries explicit, scoped, and safe.

- Design for rate limits (especially when hitting the GitHub API).

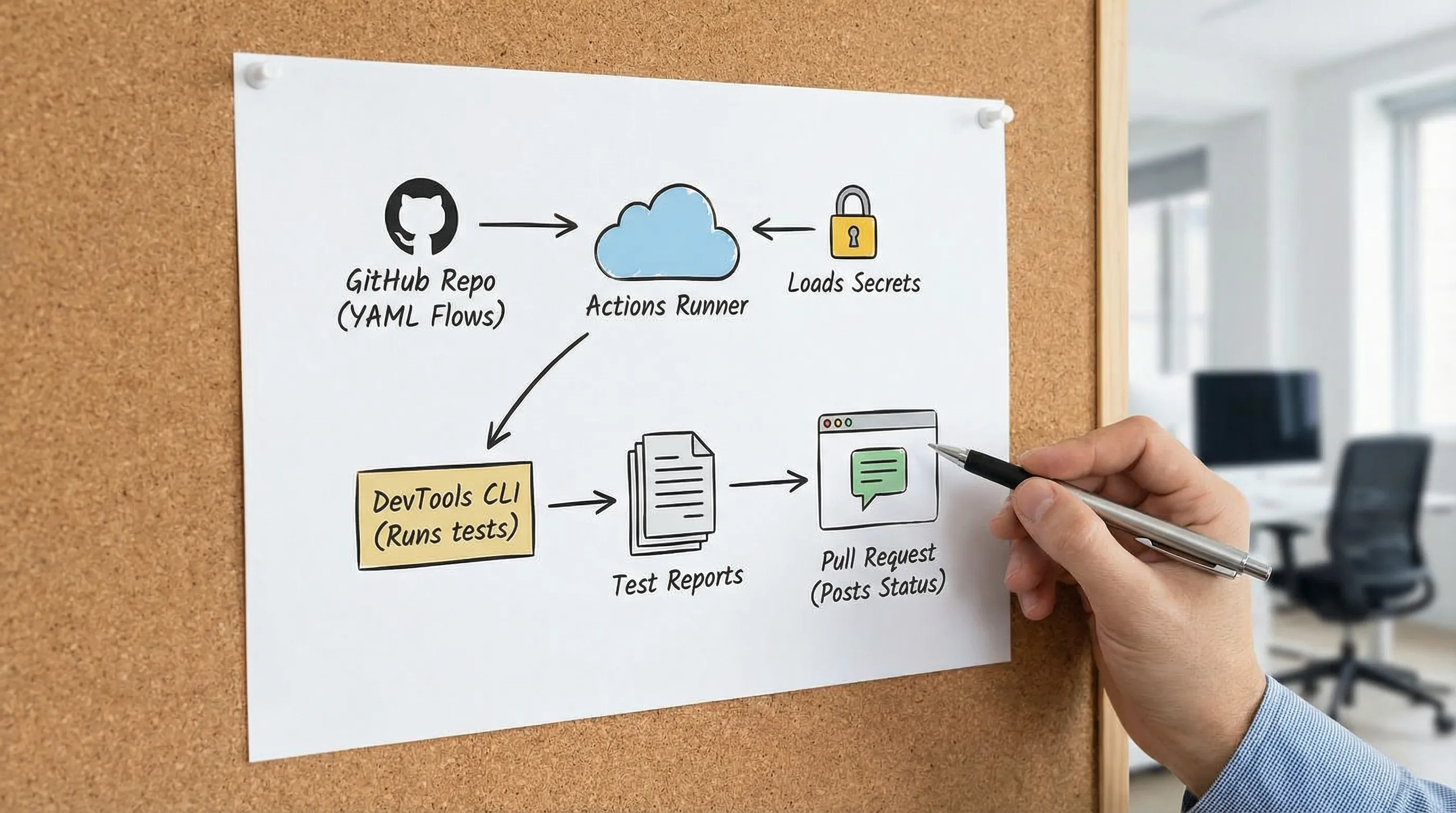

This guide focuses on YAML-based API testing stored in Git, run in GitHub Actions, with practical patterns for secrets, auth, retries, and rate limiting.

Why YAML flows in Git matter in CI (and where Postman, Newman, Bruno break down)

In GitHub Actions, your tests are run by ephemeral runners. That reality pushes you toward “tests as code”: the test artifact needs to be:

- Readable in PR review (you can reason about what changed)

- Diffable (reviewers see exactly which request/assertion changed)

- Portable (works locally and in CI without a UI export)

This is where many teams hit friction:

- Postman + Newman: collections and environments are JSON blobs. Diffs are noisy, merge conflicts are common, and the “source of truth” tends to drift between UI edits and repo exports.

- Bruno: it is file-based, but uses a custom `.bru` format (a DSL). That can be fine, but it is still a tool-specific language your team must learn and standardize.

- DevTools: flows are native YAML intended to be stored in Git and reviewed like any other code. DevTools also converts real browser traffic (HAR) into executable flows, so you can start from what actually happened on the wire.

If your goal is GitHub Actions reliability, the practical win is not “more features”. It is less hidden state.

Secrets in GitHub Actions: keep credentials out of logs and out of YAML

For experienced teams, “use GitHub Secrets” is table stakes. The subtle failures come from how secrets are wired and how easy it is to leak them in logs.

Golden rules for CI secrets

- Do not commit secrets to repo files, test YAML, or example configs.

- Do not print secrets (directly or indirectly) in workflow logs.

- Prefer short-lived credentials when possible (OIDC, GitHub App tokens).

- Use least privilege: scope tokens to the smallest set of permissions and repos.

Common secret sources (and when to use each)

| Credential type | Where it comes from | Best for | Notes |
|---|---|---|---|
| `GITHUB_TOKEN` | Auto-generated for each workflow job | Calling the GitHub API for the repository that runs the workflow | Permissions are controlled via `permissions:` in the workflow. See GitHub docs. |
| PAT (Personal Access Token) | Stored as `secrets.*` | Legacy integrations, cross-org access | Prefer fine-grained PATs. Rotate regularly. |
| GitHub App installation token | Generated during workflow using App credentials | Org-wide automation with strong control | Requires App setup and JWT signing. Good for “real” automation. |
| OIDC to cloud (AWS/GCP/Azure) | GitHub OIDC federation | Calling internal APIs behind cloud IAM | See OIDC security guide. |
| Service API keys | Stored as `secrets.*` | Third-party APIs | Consider separate keys per environment. |

Workflow hygiene that prevents secret leaks

- Set `permissions:` explicitly (don’t rely on defaults).

- Avoid `set -x` in bash.

- Be careful with tools that echo full request headers on failures.

Here is a GitHub Actions job skeleton that is “secure by default”:

```yaml
name: api-tests

on:
  pull_request:
  push:
    branches: [ main ]

jobs:
  test:
    runs-on: ubuntu-latest
    permissions:
      contents: read
    env:
      API_BASE_URL: https://api.example.internal
    steps:
      - name: Checkout
        uses: actions/checkout@v4

      - name: Install DevTools CLI
        run: |
          # Follow the install method from DevTools docs for your environment
          # Example placeholder:
          echo "install devtools"

      - name: Run API flows
        env:
          API_TOKEN: ${{ secrets.API_TOKEN }}
        run: |
          set -euo pipefail
          # Keep command output deterministic; avoid printing headers/tokens
          devtools run ./flows
```

Notes:

- `API_TOKEN` exists only in the step that needs it.

- You can add environment protection rules for prod-like credentials.
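Values stored in GitHub Secrets are redacted from logs automatically, but a value derived at runtime (for example, a session token returned by a login call) is not. The following is a minimal sketch, assuming a hypothetical helper named `mask_value`, that registers such a value with the runner through the `::add-mask::` workflow command before anything else has a chance to print it:

```shell
#!/bin/sh
# Sketch: register a runtime-derived value for log masking.
# `mask_value` is a hypothetical helper name; `::add-mask::` is the
# GitHub Actions workflow command that asks the runner to redact a string.
mask_value() {
  # Refuse empty input so a missing token is noticed instead of ignored.
  if [ -z "${1:-}" ]; then
    echo "mask_value: empty value" >&2
    return 1
  fi
  printf '::add-mask::%s\n' "$1"
}

# Possible usage inside a step (SESSION_TOKEN is illustrative):
#   mask_value "$SESSION_TOKEN"
```

Masking only affects log output written after the command is issued, so call it as soon as the value exists.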

Auth in CI: choosing a strategy that survives parallelism and environments

Auth is where CI realism collides with determinism.

Pattern 1: Use GITHUB_TOKEN for GitHub API calls

If your API tests call the GitHub API (for example, verifying a webhook delivery pipeline or bootstrapping fixtures), prefer GITHUB_TOKEN over a PAT where possible.

- Explicitly set permissions.

- Use GitHub’s REST endpoints and handle rate limits (covered below).

Example:

```yaml
permissions:
  contents: read
  issues: write
```

Then pass the token to the step that needs it:

```yaml
env:
  GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
```

Many actions read the token by default, but `run` steps do not receive it as an environment variable unless you map it like this. Being explicit also makes intent and boundaries clearer.

Pattern 2: OIDC for internal APIs (recommended when available)

If your internal API sits behind cloud IAM, OIDC is typically the cleanest path:

- No long-lived secrets.

- Strong audit trails.

- Easy environment separation.

The key CI design idea is: mint the token during the job, then run your flows.
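As a rough illustration of "mint the token during the job", the sketch below requests a GitHub OIDC token from inside a step. It assumes the job declares `permissions: id-token: write`, in which case the runner sets `ACTIONS_ID_TOKEN_REQUEST_URL` and `ACTIONS_ID_TOKEN_REQUEST_TOKEN`. The helper names and the audience value are hypothetical, and whether your API accepts this token directly or expects it to be exchanged with a cloud provider first depends on your setup.

```shell
#!/bin/sh
# Sketch: mint a short-lived OIDC token inside the job, then run flows.
# Helper names and the audience string are illustrative assumptions.

# Build the token request URL for a given audience.
oidc_request_url() {
  # $1 = ACTIONS_ID_TOKEN_REQUEST_URL (it already carries a query string)
  # $2 = audience expected by the API or cloud provider (URL-encode it
  #      first if it contains special characters)
  printf '%s&audience=%s\n' "$1" "$2"
}

# Fetch the token and print the JWT on stdout. Needs curl and jq.
fetch_oidc_token() {
  url=$(oidc_request_url "$ACTIONS_ID_TOKEN_REQUEST_URL" "$1")
  curl -sSf -H "Authorization: bearer $ACTIONS_ID_TOKEN_REQUEST_TOKEN" "$url" \
    | jq -r '.value'
}

# Possible usage inside a step:
#   API_TOKEN=$(fetch_oidc_token "my-internal-api")
#   echo "::add-mask::$API_TOKEN"
#   export API_TOKEN
#   devtools run ./flows
```

Because the token is created per job and expires quickly, there is nothing long-lived to rotate or leak from repository settings.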

Pattern 3: Login request in the flow (request chaining)

For APIs that require session tokens (OAuth2 password flow, session cookies, etc.), you want request chaining:

- Request A authenticates.

- Extract token/cookie.

- Request B/C/D use it.

DevTools flows are YAML-first and commonly generated from HAR traffic, so the chain can reflect how your app actually authenticates.

A simplified example of what chaining often looks like conceptually (adapt the exact schema to DevTools flow YAML as documented):

```yaml
# Example pattern only: authenticate, extract, reuse
vars:
  base_url: ${API_BASE_URL}

steps:
  - name: login
    request:
      method: POST
      url: ${base_url}/auth/login
      json:
        username: ${API_USER}
        password: ${API_PASSWORD}
    extract:
      access_token: $.access_token

  - name: get-profile
    request:
      method: GET
      url: ${base_url}/me
      headers:
        Authorization: Bearer ${access_token}
    assert:
      status: 200
```

Practical CI advice:

- Prefer client credentials or token exchange over username/password when possible.

- Keep your “login” step stable and fast. If login is flaky, the whole suite becomes flaky.

Retries: make them explicit, scoped, and safe

Retries in CI are not a moral failure. They are an engineering tool.

The mistake is retrying everything indiscriminately. Retrying non-idempotent requests can create duplicates (double charges, double writes) and makes test results harder to interpret.

Classify what you are retrying

| Failure type | Examples | Retry? | Better fix |
|---|---|---|---|
| Transient network | connection reset, DNS hiccup, 502 from gateway | Yes, limited | Add short retry with backoff; improve infra later |
| Eventual consistency | read-after-write lag, async processing | Maybe | Add polling with a timeout; assert on stable conditions |
| Rate limiting | 429, GitHub API secondary rate limits | Yes, but with backoff | Reduce concurrency; use conditional requests |
| Logic bugs | 400/422 validation errors, deterministic 500 | No | Fix code or test |
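The classification in the table can be encoded as a small lookup so that wrapper scripts make the same decision every time. This is a sketch only: `retry_decision` is a hypothetical helper, and the exact status list (in particular treating 503 and 504 like their neighbours) is an assumption to adjust for your API and gateway.

```shell
#!/bin/sh
# Sketch: map an HTTP status code to a retry decision, following the table.
# The helper name and the exact code list are assumptions.
retry_decision() {
  case "$1" in
    429|503) echo "backoff" ;;  # rate limited or overloaded: wait, then retry
    502|504) echo "retry"   ;;  # transient gateway failure: short, limited retry
    400|422) echo "fail"    ;;  # validation error: deterministic, fix code or test
    *)       echo "fail"    ;;  # anything else, including a plain 500: fail loudly
  esac
}

# Possible usage:
#   decision=$(retry_decision "$http_status")
```

Defaulting unknown codes to `fail` keeps new failure modes visible instead of hiding them behind retries.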

Where to implement retries

You have three realistic places to implement retries in GitHub Actions:

- In your API runner/tool (best if it supports per-request retry policies).

- In a wrapper script that reruns the suite under specific exit codes.

- In test design, by isolating the flaky boundary and polling for readiness.

A simple, controlled wrapper approach that avoids retrying forever:

```yaml
- name: Run API flows (retry once on failure)
  env:
    API_TOKEN: ${{ secrets.API_TOKEN }}
  run: |
    set -euo pipefail
    attempt=1
    max_attempts=2
    until [ "$attempt" -gt "$max_attempts" ]; do
      echo "Attempt $attempt/$max_attempts"
      if devtools run ./flows; then
        exit 0
      fi
      attempt=$((attempt + 1))
      # Pause before the next attempt, but not after the last one
      if [ "$attempt" -le "$max_attempts" ]; then
        sleep 5
      fi
    done
    exit 1
```

This is intentionally blunt: it reruns the whole suite on any non-zero exit, whether or not the failure was transient. For mature suites, prefer per-request policies and idempotency awareness.
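A slightly less blunt variant of the wrapper retries only on exit codes you list as transient and doubles the delay between attempts. This is a sketch under assumptions: `retry_with_backoff` is a hypothetical helper, and the exit code `75` in the usage comment is a placeholder, since which exit codes (if any) your runner reserves for transient failures has to come from its documentation.

```shell
#!/bin/sh
# Sketch: rerun a command with exponential backoff, retrying only on
# exit codes the caller lists as transient.
#
# usage: retry_with_backoff MAX_ATTEMPTS BASE_DELAY_S "CODES" command [args...]
retry_with_backoff() {
  max_attempts=$1
  delay=$2
  retry_codes=$3
  shift 3
  attempt=1
  while :; do
    "$@" && return 0
    status=$?
    # Give up immediately on exit codes that are not listed as transient.
    case " $retry_codes " in
      *" $status "*) ;;
      *) return "$status" ;;
    esac
    # Give up once the attempt budget is spent.
    if [ "$attempt" -ge "$max_attempts" ]; then
      return "$status"
    fi
    echo "attempt $attempt/$max_attempts exited $status; retrying in ${delay}s" >&2
    sleep "$delay"
    delay=$((delay * 2))
    attempt=$((attempt + 1))
  done
}

# Possible usage (75 is a placeholder exit code, not a documented one):
#   retry_with_backoff 3 5 "75" devtools run ./flows
```

The command's own exit status is returned on failure, so the step still fails with a meaningful code when retries are exhausted.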

Avoiding retry amplification under parallel runs

Most teams eventually parallelize tests to keep PR latency down. That is good, until retries multiply traffic:

- 20 parallel workers

- each worker retries a failing request twice

- you just tripled load right when your system is degraded

If you run DevTools flows in parallel (a common CI pattern, and one of the motivations for replacing Newman), couple it with:

- lower concurrency for endpoints with strict quotas

- backoff on 429/503

- fixtures that do not collide (unique IDs per worker)
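To make the amplification concrete: 20 workers that each send one request plus up to two retries produce up to 60 requests where 20 were planned. The sketch below computes that worst case and builds fixture names that cannot collide across workers. Both helper names and the worker index argument are hypothetical; `GITHUB_RUN_ID` and `GITHUB_RUN_ATTEMPT` are variables the Actions runner provides.

```shell
#!/bin/sh
# Sketch: worst-case request volume under retries, plus collision-free
# fixture names. Helper names are illustrative assumptions.

# Each worker sends the original request plus up to N retries.
worst_case_requests() {
  # $1 = parallel workers, $2 = retries per failing request
  echo $(( $1 * (1 + $2) ))
}

# Build a fixture name that is unique per workflow run and per worker.
fixture_id() {
  # $1 = worker index chosen by your parallelization scheme.
  # Falls back to "local" values when running outside CI.
  echo "fx-${GITHUB_RUN_ID:-local}-${GITHUB_RUN_ATTEMPT:-1}-w${1}"
}

# Possible usage:
#   worst_case_requests 20 2
#   fixture_id 3
```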

Rate limits: design for GitHub API and for your own API

Two rate limits matter in GitHub Actions:

- Your service’s limits (global or per-token)

- The GitHub API limits (and secondary throttles)

GitHub API rate limiting essentials

GitHub’s REST API returns headers like:

- `X-RateLimit-Limit`

- `X-RateLimit-Remaining`

- `X-RateLimit-Reset`

When you hit rate limits, you may see 403 or 429 depending on context, and GitHub may also apply “secondary rate limits”. GitHub’s documentation is the source of truth for current behavior: see Rate limits for the REST API.

If your tests exercise GitHub API endpoints (for example, provisioning repos, issues, or webhooks as fixtures), you should:

- Reuse one token per job rather than minting many tokens.

- Cache fixture state where possible (create once, reuse; or create per-branch and clean up).

- Avoid polling loops that hammer the API.
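Instead of polling blindly, a wrapper can read the rate limit headers from a response and wait until the reset time. The sketch below is one way to do that, assuming you have captured the response headers to a file (for example with `curl -D`). The helper names are hypothetical, and `X-RateLimit-Reset` is treated as UTC epoch seconds. If a response carries a `Retry-After` header, honoring that value takes priority.

```shell
#!/bin/sh
# Sketch: decide how long to wait from GitHub rate limit response headers.
# Helper names are illustrative assumptions.

# Extract one header value (case-insensitive) from a raw header dump on stdin.
header_value() {
  awk -v name="$1" -F': *' '
    tolower($1) == tolower(name) { gsub(/\r/, "", $2); print $2; exit }
  '
}

# Seconds to wait until the reset time; never negative.
seconds_until_reset() {
  # $1 = X-RateLimit-Reset (epoch seconds), $2 = current time (epoch seconds)
  remaining_s=$(( $1 - $2 ))
  if [ "$remaining_s" -lt 0 ]; then
    remaining_s=0
  fi
  echo "$remaining_s"
}

# Possible usage (headers.txt is an illustrative file name):
#   reset=$(header_value 'X-RateLimit-Reset' < headers.txt)
#   sleep "$(seconds_until_reset "$reset" "$(date +%s)")"
```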

Control concurrency at the workflow level

GitHub Actions supports concurrency groups. This is a simple way to avoid multiple runs fighting for the same quota (or the same staging environment).

```yaml
concurrency:
  group: api-tests-${{ github.ref }}
  cancel-in-progress: true
```

For shared staging environments, you might choose a global group instead:

```yaml
concurrency:
  group: api-tests-staging
  cancel-in-progress: false
```

Control concurrency at the suite level

Even if your workflow runs once, your test runner might issue many requests concurrently.

If your suite hits rate limits, the most effective fix is usually:

- reduce parallelism for the affected flows

- split “fast read-only” checks from “write-heavy” checks

- use idempotency keys where supported

(DevTools is designed to run flows quickly and can be used in parallelized CI setups; when hitting quotas, tune parallel execution rather than blindly retrying.)

A pragmatic “rate limit budget” check

For GitHub API-heavy suites, add an early diagnostic step that checks remaining budget before you run destructive fixtures. One simple approach is calling the rate limit endpoint and failing fast if you’re near zero.

You can do this with curl using GITHUB_TOKEN, or with gh api if you install GitHub CLI. Keep the output minimal to avoid log noise and to keep tokens out of logs.
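A minimal sketch of such a budget check with `curl` and `jq` follows. The helper names and the threshold are assumptions; `GET /rate_limit` is the GitHub REST endpoint that reports remaining quota, and the sketch reads only the `core` resource. The script prints a single summary line and never echoes the token or response headers.

```shell
#!/bin/sh
# Sketch: fail fast when the GitHub API budget is nearly spent.
# Helper names and the threshold are illustrative. Needs curl and jq.

# Print the remaining core REST quota for the token in GITHUB_TOKEN.
remaining_core_quota() {
  curl -sSf \
    -H "Authorization: Bearer $GITHUB_TOKEN" \
    -H "Accept: application/vnd.github+json" \
    https://api.github.com/rate_limit \
    | jq -r '.resources.core.remaining'
}

# Return 0 when enough budget is left, 1 otherwise.
check_budget() {
  # $1 = remaining requests, $2 = minimum required for the suite
  if [ "$1" -lt "$2" ]; then
    echo "rate limit budget too low: $1 remaining, need $2" >&2
    return 1
  fi
  echo "rate limit budget ok: $1 remaining"
}

# Possible usage before creating fixtures (200 is a placeholder threshold):
#   check_budget "$(remaining_core_quota)" 200
```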

Putting it together: a CI pattern that stays reviewable

When you store flows as YAML in Git, the workflow is straightforward:

- PR changes modify YAML flows.

- Reviewers see diffs.

- CI runs the same flows deterministically.

A practical repository layout:

- `flows/` for API flows (checked into Git)

- `scripts/` for CI wrappers (retry/backoff/fixture setup)

- `.github/workflows/` for Actions workflows

And a CI job that follows the principles from this article:

```yaml
name: api-tests

on:
  pull_request:

concurrency:
  group: api-tests-${{ github.ref }}
  cancel-in-progress: true

jobs:
  test:
    runs-on: ubuntu-latest
    permissions:
      contents: read
    steps:
      - uses: actions/checkout@v4

      - name: Install DevTools CLI
        run: |
          echo "install devtools"

      - name: Run flows
        env:
          API_BASE_URL: ${{ vars.API_BASE_URL }}
          API_TOKEN: ${{ secrets.API_TOKEN }}
        run: |
          set -euo pipefail
          ./scripts/run-flows.sh
```

This keeps the GitHub Actions YAML simple and moves “policy” (retries, limits, environment switches) into a script you can review and version.
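The contents of `scripts/run-flows.sh` are not prescribed here, but one check worth putting at the top is a fail-fast guard for missing configuration. The sketch below is an assumption about how such a wrapper might start; only the variable names `API_BASE_URL` and `API_TOKEN` come from the workflow above, and `require_env` is a hypothetical helper. An empty `API_TOKEN` is a common cause of confusing 401s, for example on pull requests from forks, where repository secrets are not passed to the workflow.

```shell
#!/bin/sh
# Sketch of a guard that a scripts/run-flows.sh wrapper might start with.
# The helper name and script structure are illustrative assumptions.

# Fail with a clear message when a required variable is unset or empty.
# Prints the variable name only, never its value.
require_env() {
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing required environment variable: $name" >&2
      return 1
    fi
  done
}

# Possible usage at the top of the wrapper:
#   require_env API_BASE_URL API_TOKEN
#   devtools run ./flows
```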

If you want an example focused specifically on running DevTools in CI as a Newman alternative (including reporting patterns), see the DevTools guide: Faster CI with DevTools CLI.

Frequently Asked Questions

How should I authenticate to the GitHub API in GitHub Actions? Use `GITHUB_TOKEN` when possible, with an explicit `permissions:` block. Use a GitHub App token or fine-grained PAT only when you need cross-org or elevated access.

What is the safest way to pass API secrets to YAML-based tests? Inject secrets as environment variables from GitHub Secrets at runtime. Keep secrets out of committed YAML and avoid printing headers or request dumps in CI logs.

Should I retry API tests in CI? Yes, but only for transient failures. Keep retries limited, add backoff for 429/503, and avoid retrying non-idempotent write requests unless you have idempotency keys.

How do I handle GitHub API rate limits during tests? Reduce concurrency, avoid aggressive polling, reuse tokens, and check rate limit headers. Use a workflow `concurrency:` group to prevent overlapping runs from exhausting quota.

Why replace Postman/Newman for GitHub Actions pipelines? Postman collections/environments are JSON and often noisy in diffs and PR review. A YAML-first approach keeps tests readable and reviewable in Git, and avoids UI-locked workflows.

Run GitHub Actions API tests with Git-reviewed YAML flows

If your current setup relies on Postman/Newman exports or UI-edited artifacts, moving to Git-reviewed YAML is usually the fastest path to more deterministic CI.

DevTools is built around YAML-first flows that can be generated from real HAR traffic and executed locally or in CI. Start with the CI guide and adapt it to your auth, retry, and rate limit policies.